kafka-producer-perf-test compression-codec | kafka producer perf

How To Tune The Apache Kafka Producer Client: Use the producer-perf-test tool to view the throughput and other metrics

There are several compression types available: none (the default), gzip, lz4, snappy, and zstd (introduced in Kafka 2.1). Compression works by consolidating repeated values, thereby reducing the amount of data sent over the network and stored on disk.

The tutorial "How to optimize your Apache Kafka producer application for throughput" shows how to set configuration parameters and measure a baseline using kafka-producer-perf-test. To run the tests:

`bin/kafka-producer-perf-test --topic test-rep-one --num-records 50000000 --record-size 100 --throughput 50000000 --producer.config etc/kafka/producer.properties`
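To compare codecs, the same baseline run can be repeated once per compression.type value. The sketch below only builds the command lines. `perf_test_command` is a hypothetical helper, and the sketch assumes that values passed with `--producer-props` override the same keys in the `--producer.config` file. It uses `--throughput -1`, which the tool documents as "disable throttling", instead of the very large number in the tutorial command.

```python
import shlex

# Codecs accepted by the producer's compression.type setting.
CODECS = ["none", "gzip", "snappy", "lz4", "zstd"]


def perf_test_command(topic, codec, num_records=50_000_000, record_size=100,
                      throughput=-1, config_file="etc/kafka/producer.properties"):
    """Build one kafka-producer-perf-test invocation for the given codec."""
    return [
        "bin/kafka-producer-perf-test",
        "--topic", topic,
        "--num-records", str(num_records),
        "--record-size", str(record_size),
        # -1 disables throttling so the producer sends as fast as it can
        "--throughput", str(throughput),
        "--producer.config", config_file,
        # per-run override of the compression setting from the config file
        "--producer-props", f"compression.type={codec}",
    ]


if __name__ == "__main__":
    for codec in CODECS:
        # Print each command; run it with subprocess.run(cmd, check=True)
        # against a test cluster if desired.
        print(shlex.join(perf_test_command("test-rep-one", codec)))
```

Keeping every other flag fixed means differences between runs can be attributed to the codec alone.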

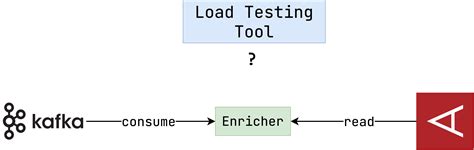

performance testing in kafka

Apache Kafka Message Compression. Kafka supports two types of compression: producer-side and broker-side. Compression enabled on the producer side doesn't require any configuration change on the brokers or consumers.
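Why compression pays off depends on how much repetition the data contains. The sketch below uses Python's standard gzip module to compare a batch of similar JSON records (made-up fields) with random bytes of the same length. It illustrates the general principle only; it does not call Kafka.

```python
import gzip
import json
import os


def compression_ratio(payload: bytes) -> float:
    """Return original size divided by gzip-compressed size."""
    return len(payload) / len(gzip.compress(payload))


# 1,000 JSON records sharing field names and most values: highly repetitive.
records = [
    {"orderId": i, "status": "CREATED", "currency": "EUR", "country": "DE"}
    for i in range(1000)
]
repetitive = "\n".join(json.dumps(r) for r in records).encode()

# Random bytes of the same length: nothing for the codec to consolidate.
random_bytes = os.urandom(len(repetitive))

print(f"repetitive JSON: {compression_ratio(repetitive):.1f}x")
print(f"random bytes:    {compression_ratio(random_bytes):.2f}x")
```

Already-compressed or encrypted payloads behave like the random case, so enabling compression for them mostly costs CPU.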

Follow the step-by-step tutorial "How to optimize your Kafka producer for throughput", which demonstrates how to use kafka-producer-perf-test to measure baseline performance. Based on our own test results, enabling compression when sending messages with Kafka can provide great benefits in terms of disk space utilization and network usage.

Related topics include strategies for handling large messages in Kafka, such as compression and message segmentation; operating system optimization for Kafka performance, including file system tuning, network settings, and kernel parameters; and Kafka's built-in tools, the kafka-producer-perf-test.sh and kafka-consumer-perf-test.sh scripts.

The Advanced Kafka Producer Tutorial covers message compression and how producer-level message compression works in Apache Kafka. Compression can also be enabled broker-side (topic-level) with the setting compression.type: if the destination topic is set to compression.type=producer, the broker retains the original compression codec set by the producer.

kafka producer perf

kafka producer config

Configure the batch.size, linger.ms, and compression.type properties on your Kafka producer to improve performance under the following conditions: 1) records are arriving faster than the Kafka producer can send them; 2) a large volume of data is being written to the topic, which puts a heavy load on the producer.
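These three settings interact because the producer compresses each record batch as a unit. A larger batch.size and a non-zero linger.ms let more records accumulate per batch, which gives the codec more repetition to exploit. The sketch below approximates this with gzip over groups of made-up JSON records. `compressed_size` is a hypothetical helper, and it ignores Kafka's own batch and record headers.

```python
import gzip
import json


def compressed_size(records, batch_records):
    """Total gzip output size when records are compressed in groups of batch_records."""
    total = 0
    for start in range(0, len(records), batch_records):
        batch = b"".join(records[start:start + batch_records])
        total += len(gzip.compress(batch))
    return total


records = [
    json.dumps({"orderId": i, "status": "CREATED", "currency": "EUR"}).encode()
    for i in range(2000)
]
raw = sum(len(r) for r in records)

for n in (1, 10, 100, 1000):
    size = compressed_size(records, n)
    print(f"{n:>5} records/batch: {size:>7} bytes ({raw / size:.1f}x)")
```

With one record per batch, the per-batch gzip overhead can make the output larger than the input. This is one reason compression works best together with batching.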

Compression. To optimize for throughput, you can also enable compression on the producer, which means many bits can be sent as fewer bits. Enable compression by configuring the compression.type parameter, which can be set to one of the following standard compression codecs: lz4 (recommended for performance), snappy, zstd, or gzip.
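A small guard can catch a misspelled codec before the configuration reaches a client. The sketch assumes producer settings are kept in a plain dict keyed by Kafka property names, which you would then pass to your client library. `with_compression` is a hypothetical helper, and it defaults to lz4 following the recommendation above.

```python
# Values accepted by the producer's compression.type setting.
VALID_CODECS = ("none", "gzip", "snappy", "lz4", "zstd")


def with_compression(config: dict, codec: str = "lz4") -> dict:
    """Return a copy of a producer config with compression.type set.

    Raises ValueError for codec names Kafka does not recognise; the input
    dict is left unchanged.
    """
    if codec not in VALID_CODECS:
        raise ValueError(
            f"unsupported compression.type {codec!r}; expected one of {VALID_CODECS}"
        )
    return {**config, "compression.type": codec}


if __name__ == "__main__":
    base = {"bootstrap.servers": "localhost:9092", "linger.ms": "20"}
    print(with_compression(base))
    print(with_compression(base, "zstd"))
```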

kafka-producer-perf-test.sh - We will add a csv option to this to dump incremental statistics in CSV format for consumption by automated tools. kafka-consumer-perf-test.sh - Likewise, we will add a csv option here. jmx-dump.sh - This will just poll the Kafka and ZooKeeper JMX stats every 30 seconds or so and output them as CSV.
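A similar result can be approximated outside the tool by parsing the lines kafka-producer-perf-test prints and writing one CSV row per run. The regular expression below encodes an assumed output shape ("N records sent, X records/sec (Y MB/sec), Z ms avg latency, W ms max latency"), which you should check against your Kafka version. The function names are hypothetical.

```python
import csv
import io
import re

# Assumed shape of the periodic and final report lines; the final summary
# has extra percentile fields after "max latency", which are ignored here.
SUMMARY = re.compile(
    r"(?P<records>\d+) records sent, "
    r"(?P<records_per_sec>[\d.]+) records/sec \((?P<mb_per_sec>[\d.]+) MB/sec\), "
    r"(?P<avg_latency_ms>[\d.]+) ms avg latency, "
    r"(?P<max_latency_ms>[\d.]+) ms max latency"
)

FIELDS = ["codec", "records", "records_per_sec", "mb_per_sec",
          "avg_latency_ms", "max_latency_ms"]


def summary_to_row(codec: str, output: str) -> dict:
    """Return the figures from the last matching line, i.e. the final summary."""
    matches = list(SUMMARY.finditer(output))
    if not matches:
        raise ValueError("no summary line found in perf-test output")
    return {"codec": codec, **matches[-1].groupdict()}


def rows_to_csv(rows) -> str:
    """Serialize one row per run (for example, one per compression codec)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

In practice you would capture each run's stdout, for example with `subprocess.run(cmd, capture_output=True, text=True).stdout`, and pass it to `summary_to_row` together with the codec used.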

I have this command: `./bin/kafka-producer-perf-test.sh --broker-list=localhost:9092 --messages 10000000 --topic test --threads 10 --message-size 1000 --batch-size 100 --compression-codec 1`. I would like to run it with actual text, but this one just sends empty messages.

What's the difference between the following ways of enabling compression in Kafka? Approach 1: create a topic with the command `bin/kafka-topics.sh --create --zookeeper localhost:2181 --config compression.type=gzip --topic test`. Approach 2: set the property compression.type = gzip in the Kafka producer client API.

For the legacy producer's compressed.topics setting: if the compression codec is anything other than NoCompressionCodec, enable compression only for the specified topics, if any. If the list of compressed topics is empty, enable the specified compression codec for all topics. If the compression codec is NoCompressionCodec, compression is disabled for all topics.

zk.read.num.retries: 3

If Kafka producer compression is set (e.g. to gzip), and the broker configuration is also set to the same codec, will the broker re-compress any messages from the producer, or recognise that it's the same codec and skip broker-side re-compression? I'm aware that the broker can be configured to inherit the producer's codec via the 'producer' setting.
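The two approaches in the question can be compared with a simplified model of the topic-level compression.type setting. The model assumes the current record-batch format. It treats a broker recompression as needed only when the topic's target codec differs from the producer's codec. This is a simplification: brokers may still rewrite batches in other situations, for example when converting between message formats for old clients. `stored_codec` is a hypothetical helper.

```python
def stored_codec(producer_codec: str, topic_compression_type: str = "producer"):
    """Return (codec stored in the log, whether the broker must recompress).

    Simplified model: 'producer' keeps the producer's codec, 'uncompressed'
    means no compression, and any other value is a codec the broker enforces.
    """
    if topic_compression_type == "producer":
        # Approach 2 alone: batches are stored as the producer compressed them.
        return producer_codec, False
    target = "none" if topic_compression_type == "uncompressed" else topic_compression_type
    # Approach 1: the broker recompresses only if the producer used another codec.
    return target, target != producer_codec


if __name__ == "__main__":
    print(stored_codec("gzip", "gzip"))    # both sides set to the same codec
    print(stored_codec("none", "gzip"))    # only the topic sets compression
    print(stored_codec("lz4"))             # topic left at 'producer'
```

Under this model, setting the codec on the producer also compresses data on the network path, while a topic-level setting alone only affects what the broker stores.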

Nevertheless, we have tested the behavior with all Kafka compression codecs (snappy, lz4, gzip and zstd) and with different compression levels, using a significant sample of your data. We have seen a massive improvement when setting the producer's compression to zstd with high compression levels. Max throughput (current): ~9 million records …

I'm new to Kafka and am using kafka_2.8.0-0.8.1.1. After building the Kafka brokers and testing them with a producer and consumer, which worked fine, I decided to run some performance tests on Kafka.
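A compression-level sweep on a sample of your own data follows the same pattern whatever the codec is. The sketch below uses Python's built-in zlib (DEFLATE, the algorithm behind gzip) as a stand-in because it ships with Python. It does not measure zstd, and the JSON record shape is made up. It reports ratio and compression time per level, so the CPU-for-size trade-off is visible.

```python
import json
import time
import zlib

# Made-up sample standing in for "a significant sample of your data".
records = "\n".join(
    json.dumps({"orderId": i, "sku": f"SKU-{i % 97}", "qty": i % 5, "status": "CREATED"})
    for i in range(20_000)
).encode()


def measure(level: int):
    """Compress the sample at a given zlib level; return (ratio, seconds)."""
    start = time.perf_counter()
    compressed = zlib.compress(records, level)
    elapsed = time.perf_counter() - start
    return len(records) / len(compressed), elapsed


if __name__ == "__main__":
    for level in (1, 6, 9):
        ratio, seconds = measure(level)
        print(f"level {level}: {ratio:.1f}x in {seconds * 1000:.1f} ms")
```

Timings vary between machines and runs, so repeat each measurement before drawing conclusions.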

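Specify the final compression type for a given topic. This configuration accepts the standard compression codecs ('gzip', 'snappy', 'lz4', and, since Kafka 2.1, 'zstd'). It additionally accepts 'uncompressed', which is equivalent to no compression, and 'producer', which means retain the original compression codec set by the producer. Type: string; default: producer; importance: high.

Kafka Mastery | Core Producer Parameters and Tuning Recommendations. 4. compression.type. Description: specifies whether the producer compresses messages. The default is none, meaning messages are not compressed. Compression can significantly reduce network I/O, disk I/O, and disk space, thereby improving overall throughput, but at the cost of CPU overhead.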

Key. The message key is used to decide which partition the message will be sent to. This is important to ensure that messages relating to the same aggregate are processed in order. For example, if you use an orderId as the key, all messages for the same order are sent to the same partition.

Compression. A Kafka producer can be configured to compress messages before sending them to brokers. The compression.type setting specifies the compression codec to be used. Supported compression codecs are "gzip", "snappy", "lz4", and (since Kafka 2.1) "zstd". Compression is beneficial and should be considered if there's a limitation on disk capacity.
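Key-based partitioning amounts to hashing the key bytes and taking the result modulo the partition count. The sketch below uses `zlib.crc32` as a stand-in hash. Kafka's default partitioner uses murmur2, so the partition numbers here will not match a real cluster. Only the property that equal keys map to equal partitions carries over. `partition_for` is a hypothetical helper.

```python
import zlib


def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition: hash the key bytes, then take the hash modulo the partition count.

    crc32 is a stand-in; Kafka's default partitioner uses murmur2 instead.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(key) % num_partitions


if __name__ == "__main__":
    # Every event for order-42 lands in the same partition, preserving its order.
    for key in (b"order-42", b"order-7", b"order-42"):
        print(key.decode(), "->", partition_for(key, 6))
```

Because the mapping depends on the partition count, adding partitions to a topic changes which partition a given key goes to.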

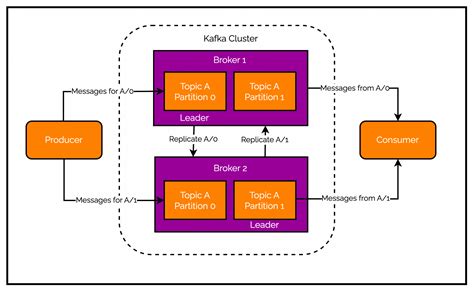

kafka load tester

When you start to analyze producer metrics to see how the producers actually perform in typical production scenarios, you can make incremental changes and compare results until you hit the sweet spot. If you want to read more about performance metrics for monitoring Kafka producers, see Kafka's Producer Sender Metrics. Common compression codecs are GZIP, Snappy, LZ4 and Zstandard.

Kafka Producer Performance Tuning. There are several important configuration options that can tune the producer for low latency vs. maximum throughput. Low latency: acks=0, linger.ms=0, batch.size=16384, compression.type=none.

Compression is the technique of reducing the size of data by removing redundancy or using encoding schemes. It can improve the performance and efficiency of Kafka by reducing network bandwidth usage and disk space usage, at the cost of additional CPU utilization for compressing and decompressing. Kafka supports several compression codecs, such as gzip, snappy, lz4, and zstd.

Concepts. The Kafka producer is conceptually much simpler than the consumer since it does not need group coordination. A producer partitioner maps each message to a topic partition, and the producer sends a produce request to the leader of that partition. The partitioners shipped with Kafka guarantee that all messages with the same non-empty key will be sent to the same partition.
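Tuning presets are easy to compare with kafka-producer-perf-test if each one is kept as a dict of producer properties and rendered as `--producer-props` arguments. The low-latency preset below copies the settings listed in the tuning paragraph. The throughput preset is hypothetical: its values are illustrative assumptions, not recommendations from the source. `producer_props_args` is a hypothetical helper.

```python
# Low-latency preset, as listed in the tuning paragraph.
LOW_LATENCY = {
    "acks": "0",
    "linger.ms": "0",
    "batch.size": "16384",
    "compression.type": "none",
}

# Hypothetical throughput-oriented preset (assumed values): wait briefly to
# fill larger batches, then compress them. acks is left at the client default.
HIGH_THROUGHPUT = {
    "linger.ms": "20",
    "batch.size": "131072",
    "compression.type": "lz4",
}


def producer_props_args(preset: dict) -> list:
    """Render a preset as --producer-props arguments for kafka-producer-perf-test."""
    return ["--producer-props"] + [f"{key}={value}" for key, value in preset.items()]


if __name__ == "__main__":
    print(" ".join(producer_props_args(LOW_LATENCY)))
    print(" ".join(producer_props_args(HIGH_THROUGHPUT)))
```

Running the same test once per preset, and changing one setting at a time afterwards, matches the incremental approach described above.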

This will begin a console consumer listening to the new_orders topic, which we can use to visualise our test.

Performance-Test the Producer. Next, we will simulate a series of records at high volume, pushing them into the same new_orders topic using the kafka-producer-perf-test.sh script. Various command-line parameters can be specified to configure the test.

When defined on the producer side, the compression.type codec is used to compress every batch for transmission, and thus to increase channel throughput. At the topic (broker) level, compression.type defines the codec used to store data in the Kafka log, i.e. to minimize disk usage. The special value producer allows Kafka to retain the original codec set by the producer.

Figure 1: Spring Boot application overview. The client sends a REST POST request to the application to trigger sending a number of events. The REST controller calls the Kafka producer to send the events.

The default is none (i.e. no compression). Valid values are none, gzip, snappy, lz4, or zstd. You can set it in the producer configs with ProducerConfig.COMPRESSION_TYPE_CONFIG (for example, "snappy"), or by using the Spring Boot property spring.kafka.producer.compression-type (the compression type for all data generated by the producer).

Testing in a real-world scenario showed how benchmarks, even those coming from zstd itself, can be misleading. Going beyond the codecs built into Kafka allowed us to improve the compression ratio 2x at very low cost. We hope that the data we gathered can be a catalyst to making Zstandard an official compression codec in Kafka to benefit other people.

The size of the messages that can be compressed within Kafka depends on the compression codec employed and on the Kafka configuration settings. The broker's message.max.bytes setting (roughly 1 MB by default) limits the size of a record batch after compression, so a codec with a better ratio lets more data fit under the same limit.
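Because the broker's limit applies to the batch after compression, a quick pre-check can compress a candidate payload and compare it with the limit. The sketch uses gzip as a stand-in for whichever codec the producer uses and ignores Kafka's batch and record headers, so results close to the limit are only indicative. The constant assumes the message.max.bytes default of recent Kafka versions (1048588 bytes); check the value configured on your broker. Note also that the Java producer separately checks each record's uncompressed serialized size against max.request.size. `fits_after_compression` is a hypothetical helper.

```python
import gzip
import os

# Assumed broker default for message.max.bytes in recent Kafka versions.
MESSAGE_MAX_BYTES = 1_048_588


def fits_after_compression(batch: bytes, limit: int = MESSAGE_MAX_BYTES) -> bool:
    """Rough check: would this payload, gzip-compressed, stay under the limit?"""
    return len(gzip.compress(batch)) <= limit


if __name__ == "__main__":
    repetitive = b'{"status": "CREATED"}\n' * 200_000   # about 4.4 MB raw
    random_payload = os.urandom(2_000_000)                # about 2 MB raw, incompressible
    print(fits_after_compression(repetitive))
    print(fits_after_compression(random_payload))
```

The repetitive payload is several times larger than the limit before compression but fits afterwards, while the random payload does not shrink and stays over it.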
